Andrew Tarantola reports in Engadget:

"The essence of the architectural approach is that it's the structure that frames things. In computers, it provides a language which we can program things in. When talking about cognitive architectures, what are the fixed memories, the learning and decision mechanisms, on top of what you're going to program or teach it. You're trying to understand the structure [of the mind] and how the pieces work together, (which) is critical. So it's not just going to emerge as individual pieces, that fixed structure is an important artifact, and then talk about the knowledge and skills on top of that."

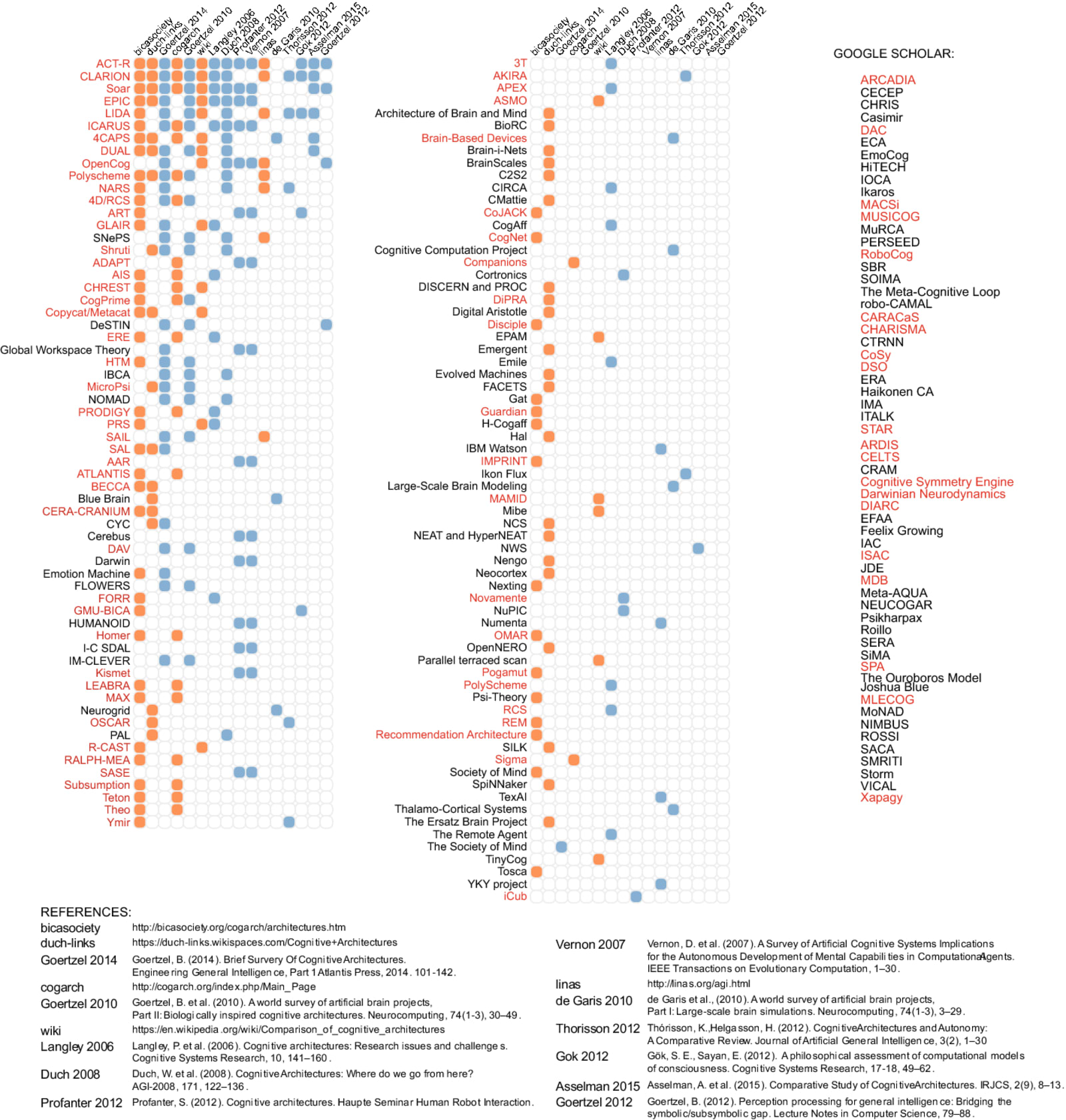

There's no one right way to build a robot, just as there's no single means of imparting it with intelligence. Last month, Engadget spoke with Nathan Michael, an associate research professor at Carnegie Mellon University and director of the Resilient Intelligent Systems Lab, whose work involves combining a robot's piecemeal capabilities, as it learns them, into an amalgamated artificial general intelligence (AGI). Think of a Roomba that learns how to vacuum, then how to mop, then how to dust and do the dishes; pretty soon, you've got Rosie from The Jetsons.

But attempting to model an intelligence on either the ephemeral human mind or the exact physical structure of the brain (rather than iterating increasingly capable Roombas) is no small task, and there is no shortage of competing hypotheses and models to boot. In fact, a 2010 survey of the field found more than two dozen such cognitive architectures under active study.

Asked about the current state of AGI research, Paul S. Rosenbloom, professor of computer science at USC and developer of the Sigma architecture, told Engadget it is "a very complex question without a clear answer." "There's the field that calls itself AGI which is a fairly recent field that's trying to define itself in contrast to traditional AI." In this sense, "traditional AI" is the narrow, single-process AI we see around us in our digital assistants and floor-scrubbing maid-bots.

"None of these fields or sub-fields are necessarily internally consistent or well-organized," John E. Laird, Professor of Engineering at the University of Michigan and developer of the Soar architecture, explained to Engadget. He points out that research in these fields operates as a set of pseudo-confederations, formed mostly by whoever decides to show up to a specific conference. "It's very difficult to get necessary and sufficient criteria for AGI or any of the sub fields because it's really a social contract of the people who decide to work in the area," he concedes.

Laird notes that in the early days of AGI research, these conferences were dominated not by academics but by hobbyists and amateurs. "There were a lot of people who were interested in AI and what the future might be, but they weren't necessarily active researchers. I think, over the years, that's changed, and the kind of people that go to that conference specifically has changed."

"From a historical perspective," Christian Lebiere, Professor of Psychology at CMU and a longtime co-developer of the ACT-R architecture, added, "AI in the old days was almost synonymous with Strong AI, which is a strong claim about a pretty broad set of human-like capabilities that could be achieved. People, to some extent, over-promised and under-delivered, so that came to a crashing halt in the early '80s and then, to some extent, the community scaled back to Narrow AI." Paulie's robot butler in Rocky IV is a pop-culture emblem of that over-promising.

But even among the competing architectures, researchers find plenty of functional overlap. Take ACT-R and Soar, for example. "ACT-R is more closely tied to modeling human cognition and also mapping the processing to the brain," Laird explained. "In Soar, we're much more inspired by psychological and sometimes biological structures, and trying to build bigger AI systems." That is, Soar explores "longer timescales, the more high level, cognitive capabilities," Laird continued, while "ACT-R will be at the shorter time scales and more detailed modeling of low level psychological phenomena."

"You can think of Sigma as a spin-off of Soar that's trying to learn from Soar, ACT-R and others," Rosenbloom added.

In 2017, Laird, Lebiere and Rosenbloom proposed the Standard Model of the Mind, a general reference model meant to act as a "cumulative reference point for the field" and a guidepost for research and application development. "We propose developing such a model for human-like minds, computational entities whose structures and processes are substantially similar to those found in human cognition," the three wrote in AI Magazine.

"If you look at the AGI community," Rosenbloom said, "you'll see everything from attempts to build a single equation that covers all of AI to approaches where we could just pick a whole bunch of convenient technologies and put them together, and hope that they'll yield something interesting." The Standard Model of the Mind is an attempt to bring order to that range by codifying the common ground among existing architectures.

"The essence of the architectural approach is that it's the structure that frames things," Rosenbloom continued. "In computers, it provides a language which we can program things in. When we're talking about cognitive architectures, we're talking about what are the fixed memories, the learning and decision mechanisms, on top of what you're going to program or teach it."

"You're trying to understand the structure [of the mind] and how the pieces work together. Understanding how they work together is a critical piece of it," Rosenbloom said. "So it's not just going to emerge from the individual pieces; you've got to actually study that fixed structure as an important artifact, and then talk about the knowledge and skills on top of that."

Laird notes that "there is some evidence that the brain is not just a homogeneous bunch of neurons, that at birth somehow starts to congeal into the structures we have in our brain." He argues that perhaps the reason it took so many millennia for intelligent life to emerge on this planet is that it took thousands of generations to create the necessary architecture and underlying structure.

This is partly why these researchers have little interest in modeling lower-level intelligences and working their way up to human-level thought processes. That and, "you can't talk to a frog," Laird exclaimed. "We've studied human cognition much more than animal cognition, at least when all this started. So you could say now the more we understand about animals, the more it makes sense to look at that kind of thing. But it didn't necessarily in the past."

Building up from those lower-level, animal-like intelligences gave rise to the behavioral approach in robotics. Its subsequent popularity, according to Laird at least, proved disastrous to AGI research. "It turns out it's dominated by what we think of as low level issues of perception and locomotion," Lebiere explained. "You're really building physical systems in the world. There is a chance that if you take that approach, you really don't pay much attention and devote resources to most of the cognitive aspects." Basically, it's the approach that has us iterating better Roombas in pursuit of Rosie rather than developing her mind and putting it in a robotic body.

"The alternative argument is that trying to just do the cognitive is building on a house of sand," Laird countered. "That you really have to have good interaction with the environment through sensing and action."

"One point in the evolution of the cognitive architecture has been the integration of perception and action together with cognition on an equal footing," Lebiere added.

But even as the bodies and minds of robots learn to grow and develop in tandem, none of Laird, Lebiere or Rosenbloom is particularly concerned about the singularity or a global coup at the hands of our mechanical creations.

"There is a set of concerns involving our systems, which are the same concerns you have about any computer system that's doing its own thing," Rosenbloom said. With self-driving vehicles, for example, you have to make sure not only that they work in the real-world environment they're designed for but also that they're working as intended. "But the notion [of going] beyond that to intelligent systems that are going to try to rule the world," he concluded. "That's a whole different thing than just autonomous vehicle safety."

Lebiere points to a different set of worries surrounding AI today. "Because so much of the machine learning side [is] data driven," he argues, "a lot of the concerns about sort of that kind of AI are privacy concerns about data, rather than in and of itself concerned about the power of the AIs."

But that's not to say that the pursuit of AGI will stagnate any time soon. The field operates in cycles, Rosenbloom explains. Booms occur "when one of these technologies grows out of its narrowness. People are very excited, because all of a sudden, you can do things you couldn't do before." We saw a similar boom with Siri and other digital assistants. However, once people realize the limitations of these new technologies, no matter how far they've advanced over what came before, they eventually grow disenchanted, and the cycle resets. But which AI-inspired technology will take off next remains anybody's guess.
