But the real issue, as the following article explains, may be that we're missing the point: technology is just a tool. What really matters is how we apply it, how we train people to use it, how we adapt our organizations to get the most out of it, and how we manage the processes by which it is operated. It is, in other words, the way intangible inputs like leadership shape tangible results that determines technology's true performance. JL

Ezra Klein reports in Vox:

Productivity numbers miss innovation gains and quality improvements, but they've always missed that. To blame mismeasurement, you'd have to prove Facebook offers more consumer surplus than cars once did, or that inflation measures tracked the change from outhouses to toilets better than the change from telephones to smartphones. The real value of cars to the economy wasn't automobile sales but the way they revolutionized our work and lives. Productivity increases will come from companies whose competency isn't technology so much as figuring out how to apply it. The hardest thing isn't building the technology but getting people to use it properly.

What do you think of when you hear the word "technology"? Do you think of jet planes and laboratory equipment and underwater farming? Or do you think of smartphones and machine-learning algorithms?

Venture capitalist Peter Thiel guesses it's the latter. When a grave-faced announcer on CNBC says "technology stocks are down today," we all know he means Facebook and Apple, not Boeing and Pfizer. To Thiel, this signals a deeper problem in the American economy: a shrinking belief in what's possible, a pessimism about what is really likely to get better. Our definition of what technology is has narrowed, and he thinks that narrowing is no accident. It's a coping mechanism in an age of technological disappointment.

"Technology gets defined as 'that which is changing fast,'" he says. "If the other things are not defined as 'technology,' we filter them out and we don't even look at them."

Thiel isn't dismissing the importance of iPhones and laptops and social networks. He founded PayPal and Palantir, was one of the earliest investors in Facebook, and now sits atop a fortune estimated in the billions. We spoke in his sleek, floor-to-ceiling-windowed apartment overlooking Manhattan — a palace built atop the riches of the IT revolution. But it's obvious to him that we're living through an extended technological stagnation. "We were promised flying cars; we got 140 characters," he likes to say.

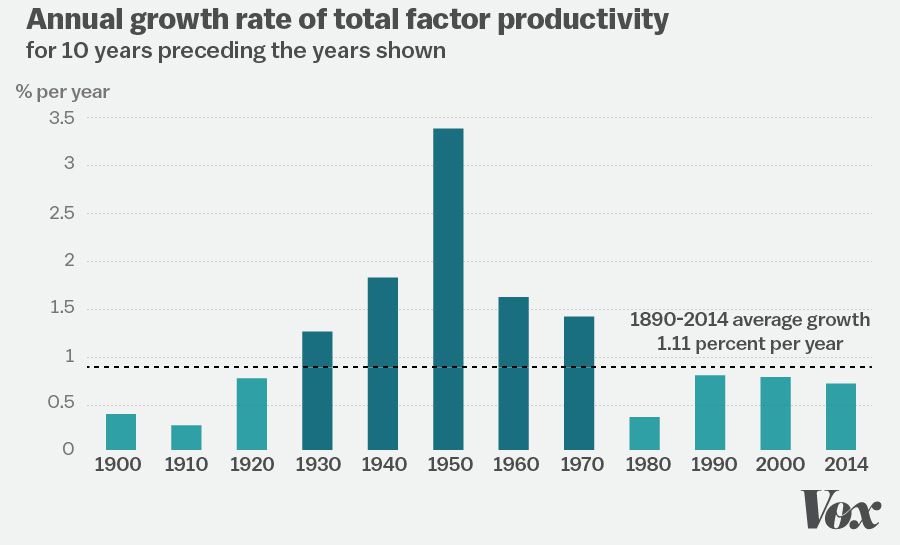

The numbers back him up. The closest the economics profession has to a measure of technological progress is an indicator called total factor productivity, or TFP. It's a bit of an odd concept: It measures the productivity gains left over after accounting for the growth of the workforce and capital investments.

When TFP is rising, it means the same number of people, working with the same amount of land and machinery, are able to make more than they were before. It's our best attempt to measure the hard-to-define bundle of innovations and improvements that keep living standards rising. It means we're figuring out how to, in Steve Jobs's famous formulation, work smarter. If TFP goes flat, then so do living standards.
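Economists usually make this "leftover" idea precise with a growth-accounting identity, the so-called Solow residual. As a sketch only, assuming a Cobb-Douglas production function (the article does not commit to any particular form), write output as $Y$, capital as $K$, labor as $L$, capital's share of income as $\alpha$, and TFP as $A$:

```latex
Y = A\,K^{\alpha}L^{1-\alpha}
\quad\Longrightarrow\quad
\frac{\Delta A}{A} \;\approx\; \frac{\Delta Y}{Y} \;-\; \alpha\,\frac{\Delta K}{K} \;-\; (1-\alpha)\,\frac{\Delta L}{L}
```

TFP growth is whatever output growth remains after the weighted growth of capital and labor is subtracted. With purely illustrative numbers (not from the article): if output grows 3 percent, capital 3 percent, and labor 1 percent, and $\alpha = 0.3$, then TFP growth is roughly $3 - 0.9 - 0.7 = 1.4$ percent. If output had grown only as fast as the weighted inputs, TFP growth would be zero, which is what "flat" means here.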

And TFP has gone flat — or at least flatter — in recent decades. Since 1970, TFP has grown at only about a third the rate it grew from 1920 to 1970. If that sounds arid and technical, then my mistake: It means we're poorer, working longer hours, and leaving a worse world for our grandchildren than we otherwise would be. The 2015 Economic Report of the President noted that if productivity growth had continued to roar along at its 1948–1973 pace, the average household's income would be $30,000 higher today.

What Thiel can't quite understand is why his fellow founders and venture capitalists can't see what he sees, why they're so damn optimistic and self-satisfied amidst an obvious, rolling disaster for human betterment.

Maybe, he muses, it's simple self-interest at work; the disappointments elsewhere in the economy have made Silicon Valley richer, more important, and more valued. With so few other advances competing for press coverage and investment dollars, the money and the prestige flow into the one sector of the economy that is pushing mightily forward. "If you're involved in the IT sector, you're like a farmer in the midst of a famine," Thiel says. "And being a farmer in a famine may actually be a very lucrative thing to be."

Or maybe it's mere myopia. Maybe the progress in our phones has distracted us from the stagnation in our communities. "You can look around you in San Francisco, and the housing looks 50, 60 years old," Thiel continues. "You can look around you in New York City and the subways are 100-plus years old. You can look around you on an airplane, and it's little different from 40 years ago — maybe it's a bit slower because the airport security is low-tech and not working terribly well. The screens are everywhere, though. Maybe they're distracting us from our surroundings rather than making us look at our surroundings."

But Thiel's peers in Silicon Valley have a different, simpler explanation. To many of them, the numbers are simply wrong.

What Larry Summers doesn't understand

If there was any single inspiration for this article, it was a speech Larry Summers gave at the Hamilton Project in February 2015. Summers is known for his confident explanations of economic phenomena, not his befuddlement. But that day, he was befuddled.

"On the one hand," he began, "we have enormous anecdotal evidence and visual evidence that points to technology having huge and pervasive effects."

Call this the "but everybody knows it" argument. Everybody knows technological innovation is reshaping the world faster than ever before. The proof is in our pockets, which now contain a tiny device that holds something close to the sum of humanity's knowledge, and it's in our children, who spend all day staring at screens, and it's in our stock market, where Apple and Google compete for the highest valuation of any company on Earth. How can anyone look at all this and doubt that we live in an age dominated by technological wonders?

"On the other hand," Summers continued, "the productivity statistics on the last dozen years are dismal. Any fully satisfactory view has to reconcile those two observations, and I have not heard it satisfactorily reconciled."

Many in Silicon Valley have a simple way of reconciling those views. The productivity statistics, they say, are simply broken.

"While I am a bull on technological progress," tweeted venture capitalist Marc Andreessen, "it also seems that much [of] that progress is deflationary in nature, so even rapid tech may not show up in GDP or productivity stats."

Hal Varian, the chief economist at Google, is also a skeptic. "The question is whether [productivity] is measuring the wrong things," he told me.

Bill Gates agrees. During our conversation, he rattled off a few of the ways our lives have been improved in recent years — digital photos, easier hotel booking, cheap GPS, nearly costless communication with friends. "The way the productivity figures are done isn't very good at capturing those quality of service–type improvements," he said.

There's much to be said for this argument. Measures of productivity are based on the sum total of goods and services the economy produces for sale. But many digital-era products are given away for free, and so never have an opportunity to show themselves in GDP statistics.

Take Google Maps. I have a crap sense of direction, so it's no exaggeration to say Google Maps has changed my life. I would pay hundreds of dollars a year for the product. In practice, I pay nothing. In terms of its direct contribution to GDP, Google Maps boosts Google's advertising business by feeding my data back to the company so they can target ads more effectively, and it probably boosts the amount of money I fork over to Verizon for my data plan. But that's not worth hundreds of dollars to Google, or to the economy as a whole. The result is that GDP data might undercount the value of Google Maps in a way it didn't undercount the value of, say, Garmin GPS devices.

This, Varian argues, is a systemic problem with the way we measure GDP: It's good at catching value to businesses but bad at catching value to individuals. "When GPS technology was adopted by trucking and logistics companies, productivity in that sector basically doubled," he says. "[Then] the price goes down to basically zero with Google Maps. It’s adopted by households. So it's reasonable to believe household productivity has gone up. But that's not really measured in our productivity statistics."

The gap between what I pay for Google Maps and the value I get from it is called "consumer surplus," and it's Silicon Valley's best defense against the grim story told by the productivity statistics. The argument is that we've broken our country's productivity statistics because so many of our great new technologies are free or nearly free to the consumer. When Henry Ford began pumping out cars, people bought his cars, and so their value showed up in GDP. Depending on the day you check, the stock market routinely certifies Google — excuse me, Alphabet — as the world's most valuable company, but few of us ever cut Larry Page or Sergey Brin a check.
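The accounting behind this argument fits in one line. As a textbook sketch, with symbols introduced here for illustration: let $W$ be what a user would be willing to pay for a product and $p$ the price actually paid. GDP records the spending; the surplus goes unrecorded:

```latex
\text{recorded in GDP} = p, \qquad \text{consumer surplus} = W - p
```

For a car, GDP captures $p$ and misses only $W - p$. For Google Maps, $p = 0$ to the user, so the directly recorded spending is zero and the entire value $W$ (in the author's case, "hundreds of dollars a year") shows up only as surplus, apart from indirect channels such as ad revenue and data plans.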

This is what Andreessen means when he says Silicon Valley's innovations are "deflationary in nature": Things like Google Maps are pushing prices down rather than pushing them up, and that's confounding our measurements.

The other problem the productivity skeptics bring up is so-called "step changes": new goods that represent such a massive change in human welfare that trying to account for them by measuring prices and inflation seems borderline ridiculous. The economist Diane Coyle puts this well. In 1836, she notes, Nathan Mayer Rothschild died from an abscessed tooth. "What might the richest man in the world at the time have paid for an antibiotic, if only they had been invented?" Surely more than the actual cost of an antibiotic.

Perhaps, she suggests, we live in an age of step changes — the products we use are getting so much better, so much faster, that the normal ways we try to account for technological improvement are breaking down. "It is not plausible that the statistics capture the step changes in quality of life brought about by all of the new technologies," she writes, "any more than the price of an antibiotic captures the value of life."

"Yes, productivity numbers do miss innovation gains and quality improvements," sighs John Fernald, an economist at the San Francisco Federal Reserve Bank who has studied productivity statistics extensively. "But they’ve always been missing that."

One problem with the mismeasurement hypothesis: There's always been mismeasurement

This is a challenge to the mismeasurement hypothesis: We've never measured productivity perfectly. We've always been confounded by consumer surplus and step changes. To explain the missing productivity of recent decades, you have to show that the problem is getting worse — to show the consumer surplus is getting bigger and the step changes more profound. You have to prove that Facebook offers more consumer surplus than cars once did; that measures of inflation tracked the change from outhouses to toilets better than the change from telephones to smartphones. That turns out to be a very hard case to make.

Consider Google Maps again. It's true that using the app is free. But the productivity gains it enables should show in other parts of the economy. If we are getting places faster and more reliably, that should allow us to make more things, have more meetings, make more connections, create more value. That's how it was for cars and trains — their real value to the economy wasn't simply sales of automobiles or tickets or gasoline, but the way they revolutionized our work and lives.

Or take Coyle's point about the step change offered by antibiotics. Is there anything in our recent history that even remotely compares to the medical advances of the 20th century? Or the sanitation advances of the late 19th century? If so, it's certainly not evident in our longevity data: Life expectancy gains have slowed sharply in the IT era. A serious appreciation of step changes suggests that our measures of productivity might have missed more in the 20th century than they have in the 21st.

Another problem with the mismeasurement hypothesis: It doesn't fit the facts

The mismeasurement hypothesis fails more specific tests, too. In January, Chad Syverson, an economist at the University of Chicago's Booth School of Business, published a paper that is, in the understated language of economics research, a devastating rebuttal to the thesis.

Syverson reasoned that if productivity gains were being systematically distorted in economies dependent on information technologies, then productivity would look better in countries whose economies were driven by other sectors. Instead, he found that the productivity slowdown, which is evident in every advanced economy, is "unrelated to the relative size of information and communication technologies in the country's economy."

Then he moved on to the consumer surplus argument. Perhaps the best way to value the digital age's advances is by trying to put a price on the time we spend using things like Facebook. Syverson used extremely generous assumptions about the value of our time, and took as a given that we would use online services even if we had to pay for them. Even then, he found the consumer surplus only fills a third of the productivity gap. (And that's before you go back and offer the same generous assumptions to fully capture the value of past innovations, which would widen the gap today's technologies need to close!)

A March paper from David Byrne, John Fernald, and Marshall Reinsdorf took a different approach but came to similar conclusions. "The major 'cost' to consumers of Facebook, Google, and the like is not the broadband access, the cell phone service, or the phone or computer; rather, it is the opportunity cost of time," they concluded. "But that time cost ... is akin to the consumer surplus obtained from television (an old economy invention) or from playing soccer with one's children."

There is real value in playing soccer with one's children, of course — it's just not the kind of value economists are looking to measure with productivity statistics.

This is a key point, and one worth dwelling on: When economists measure productivity gains, they are measuring the kind of technological advances that power economic gains. What that suggests is that even if we were mismeasuring productivity, we would see the effects of productivity-enhancing technological change in other measures of economic well-being.

You can imagine a world in which wages look flat but workers feel richer because their paychecks are securing them wonders beyond their previous imagination. In that world, people's perceptions of their economic situation, the state of the broader economy, and the prospects for their children would be rosier than the economic data seemed to justify. That is not the world we live in.

According to the Pew Research Center, the last time a majority of Americans rated their own financial condition as "good or excellent" was 2005. Gallup finds that the last time most Americans were satisfied with the way things were going in the country was 2004. The last time Americans were confident that their children's lives would be better than their own was 2001. Hell, Donald Trump is successfully running atop the slogan "Make America Great Again" — the "again" suggests many people don't feel their lives are getting better and better.

Why isn't all this technology improving the economy? Because it's not changing how we work.

There's a simple explanation for the disconnect between how much it feels like technology has changed our lives and how absent it is from our economic data: It's changing how we play and relax more than it's changing how we work and produce.

As my colleague Matthew Yglesias has written, "Digital technology has transformed a handful of industries in the media/entertainment space that occupy a mindshare that's out of proportion to their overall economic importance. The robots aren't taking our jobs; they're taking our leisure."

"Data from the American Time Use Survey," he continues, "suggests that on average Americans spend about 23 percent of their waking hours watching television, reading, or gaming. With Netflix, HDTV, Kindles, iPads, and all the rest, these are certainly activities that look drastically different in 2015 than they did in 1995 and can easily create the impression that life has been revolutionized by digital technology."

But as Yglesias notes, the entertainment and publishing industries account for far less than 23 percent of the workforce. Retail sales workers and cashiers make up the single biggest tranche of American workers, and you only have to enter your nearest Gap to see how little those jobs have changed in recent decades. Nearly a tenth of all workers are in food preparation, and even the most cursory visit to the kitchen of your local restaurant reveals that technology hasn't done much to transform that industry, either.

This is part of the narrowing of what counts as the technology sector. "If you were an airplane pilot or a stewardess in the 1950s," Thiel says, "you felt like you were part of a futuristic industry. Most people felt like they were in futuristic industries. Most people had jobs that had not existed 40 or 50 years ago."

Today, most of us have jobs that did exist 40 or 50 years ago. We use computers in them, to be sure, and that's a real change. But it's a change that mostly happened in the 1990s and early 2000s, which is why there was a temporary increase in productivity (and wages, and GDP) during that period.

The question going forward is whether we're in a temporary technological slowdown or a permanent one.

The scariest argument economist Robert Gordon makes is also the most indisputable argument he makes: There is no guarantee of continual economic growth. It's the progress of the 20th century, not the relative sluggishness of the past few decades, that should surprise us.

The case for pessimism: The past 200 years were unique in human history

The economic historian Angus Maddison, who died in 2010, estimated that the annual economic growth rate in the Western world from AD 1 to AD 1820 was 0.06 percent per year — a far cry from the 2 to 3 percent we've grown accustomed to in recent decades.
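To see how wide the gap between those two rates is, the compounding arithmetic helps. This is a short Python sketch that uses only the rates quoted above (Maddison's 0.06 percent and the 2 to 3 percent range); the function names are illustrative, not taken from any source.

```python
import math


def doubling_time(rate: float) -> float:
    """Years for output to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


def cumulative_growth(rate: float, years: int) -> float:
    """Multiple by which output grows after compounding for `years` years."""
    return (1 + rate) ** years


if __name__ == "__main__":
    # Maddison's estimate for AD 1-1820, then the modern range cited in the text.
    for rate in (0.0006, 0.02, 0.03):
        print(f"{rate:.2%} per year: output doubles every "
              f"{doubling_time(rate):,.0f} years")

    # AD 1 to AD 1820 spans 1,819 years of compounding at 0.06 percent.
    print(f"Growth multiple, AD 1-1820: "
          f"{cumulative_growth(0.0006, 1819):.1f}x")
```

At 0.06 percent a year, output takes roughly 1,150 years to double and only about triples over the entire span from AD 1 to 1820; at 2 percent it doubles in about 35 years, and at 3 percent in under 24.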

The superpowered growth of recent centuries is the result of extraordinary technological progress — progress of a type and pace unknown in any other era in human history. The lesson of that progress, Gordon writes in The Rise and Fall of American Growth, is simple: "Some inventions are more important than others," and the 20th century happened to collect some really, really important inventions.

It was in the 19th and particularly 20th centuries that we really figured out how to use fossil fuels to power, well, pretty much everything. "A newborn child in 1820 entered a world that was almost medieval," writes Gordon, "a dim world lit by candlelight, in which folk remedies treated health problems and in which travel was no faster than that possible by hoof or sail."

That newborn's great-grandchildren knew a world transformed:

When electricity made it possible to create light with the flick of a switch instead of the strike of a match, the process of creating light was changed forever. When the electric elevator allowed buildings to extend vertically instead of horizontally, the very nature of land use was changed, and urban density was created. When small electric machines attached to the floor or held in the hand replaced huge and heavy steam boilers that transmitted power by leather or rubber belts, the scope for replacing human labor with machines broadened beyond recognition. And so it was with motor vehicles replacing horses as the primary form of intra-urban transportation; no longer did society have to allocate a quarter of its agricultural land to support the feeding of the horses or maintain a sizable labor force for removing their waste.

Then, of course, there were the medical advances of the age: sanitation, anesthetic, antibiotics, surgery, chemotherapy, antidepressants. Many of the deadliest scourges of the 18th century were mere annoyances by the 20th century. Some, like smallpox, were eliminated altogether. Nothing improves a person's economic productivity quite like remaining alive.

More remarkable was how fast all this happened. "Though not a single household was wired for electricity in 1880, nearly 100 percent of U.S. urban homes were wired by 1940, and in the same time interval the percentage of urban homes with clean running piped water and sewer pipes for waste disposal had reached 94 percent," Gordon writes. "More than 80 percent of urban homes in 1940 had interior flush toilets, 73 percent had gas for heating and cooking, 58 percent had central heating, and 56 percent had mechanical refrigerators."

Gordon pushes back on the idea that he is a pessimist. He does not dismiss the value of laptop computers and GPS and Facebook and Google and iPhones and Teslas. He's just saying that the stack of them doesn't amount to electricity plus automobiles plus airplanes plus antibiotics plus indoor plumbing plus skyscrapers plus the Interstate Highway System.

But it is hard not to feel pessimistic when reading him. Gordon does not just argue that today’s innovations fall short of yesterday’s. He also argues, persuasively, that the economy is facing major headwinds in the coming years that range from an aging workforce to excessive regulations to high inequality. Our innovations will have to overcome all that, too.

The case for optimism

Gordon's views aren't universally held, to say the least. When I asked Bill Gates about The Rise and Fall of American Growth, he was unsparing. "That book will be viewed as quite ironic," he replied. "It's like the 'peace breaks out' book that was written in 1940. It will turn out to be that prophetic."

Gates's view is that the past 20 years have been an explosion of scientific advances. Over the course of our conversation, he marveled at advances in gene editing, machine learning, antibody design, driverless cars, material sciences, robotic surgery, artificial intelligence, and more.

Those discoveries are real, but they take time to turn up in new products, in usable medical treatments, in innovative startups. "We will see the dramatic effects of those things over the next 20 years, and I say that with incredible confidence," Gates says.

And while Gordon is right about the headwinds we face, there are tailwinds, too. We don't appear to be facing the world wars that overwhelmed the 20th century, and we have billions more people who are educated, connected, and working to invent the future than we did 100 years ago. The ease with which a researcher at Stanford and a researcher in Shanghai can collaborate must be worth something.

In truth, I don't have any way to adjudicate an argument over the technologies that will reshape the world 20 or 40 years from now. But if you're focused on gains over the next five, 10 or even 20 years — and for people who need help soon, those are the gains that matter — then we've probably got all the technology we need. What we're missing is everything else.

Will there be a second IT boom?

By 1989, computers were fast becoming ubiquitous in businesses and homes. They were dramatically changing how any number of industries, from journalism to banking to retail, operated. But it was hard to see the IT revolution when looking at the economic numbers. The legendary growth economist Robert Solow quipped, "You can see the computer age everywhere but in the productivity statistics."

It didn't stay that way for long: The IT revolution powered a productivity boom from 1995 to 2004.

The lesson here is simple and profound: Productivity booms often lag behind technology. As Chad Syverson has documented, the same thing happened with electricity. Around the turn of the 20th century, electricity changed lives without really changing the economy much. Then, starting in 1915, there was a decade-long acceleration in productivity as economic actors began grafting electricity onto their operations. That boom, however, quickly tapered off.

But in the case of electrification, there was a second productivity boom that arrived sometime later. This was the boom that emerged as factories, companies and entire industries were rebuilt around the possibilities of electricity — the boom that only came as complex organizations figured out how electricity could transform their operations. "History shows that productivity growth driven by general purpose technologies can arrive in multiple waves," writes Syverson.

Could the same be true for IT?

Tyler Cowen, an economist at George Mason University and author of The Great Stagnation, believes so. "I think the internet is just beginning, even though that sounds crazy," he says.

Phase one, he argues, was the internet as "an add-on." This is Best Buy letting you order stereos from a website, or businesses using Facebook ads to target customers. This is big companies eking out some productivity gains by bolting some IT onto their existing businesses.

Phase two, he says, will be new companies built top to bottom around IT — and these companies will use their superior productivity to destroy their competitors, revolutionize industries, and push the economy forward. Examples abound: Think Amazon hollowing out the retail sector, Uber disrupting the taxi cab industry, or Airbnb taking on hotels. Now imagine that in every sector of the economy — what happens if Alphabet rolls out driverless cars powered by the reams of data organized in Google Maps, or if telemedicine revolutionizes rural health care, or if MOOCs (massive open online courses) can truly drive down the cost of higher education?

These are the big leaps forward — and in most cases, we have, or will soon have, the technology to make them. But that doesn't mean they'll get made.

We have the technology. What we need is everything else.

Chris Dixon, a venture capitalist at Andreessen Horowitz, has a useful framework for thinking about this argument. "In 2005, a bunch of companies pitched me the idea for Uber," he says. "But because they followed orthodox thinking, they figured they would build the software but let other people manage the cars."

This was the dominant idea of the add-on phase of the internet: Silicon Valley should make the software, and then it should sell it to companies with expertise in all the other parts of the business. And that worked, for a while. But it could only take IT so far.

"The problem was the following," Dixon continues. "You build the software layer of Uber. Then you knock on the door of the taxi company. They’re a family business. They don’t know how to evaluate, purchase, or implement software. They don't have the budget for it. And even if you can make them a customer, the experience was not very good. Eventually Uber and Lyft and companies like this realized that by controlling the full experience and full product you can create a much better end-user experience." (Dixon's firm, I should note, is an investor in Lyft, though to their everlasting regret, they passed on Uber.)

The point here is that really taking advantage of IT in a company turns out to be really, really hard. The problems aren't merely technical; they're personnel problems, workflow problems, organizational problems, regulatory problems.

The only way to solve those problems (and thus to get the productivity gains from solving them) is to build companies designed to solve those problems. That is, however, a harder, slower process than getting consumers to switch from looking at one screen to looking at another, or to move from renting DVDs to using Netflix.

In this telling, what's holding back our economy isn't so much a dearth of technological advances but a difficulty in turning the advances we already have into companies that can actually use them.

In health care, for instance, there's more than enough technology to upend our relationships with doctors — but a mixture of status quo bias on the part of patients, confusion on the part of medical providers, regulatory barriers that scare off or impede new entrants, and anti-competitive behavior on the part of incumbents means most of us don't even have a doctor who stores our medical records in an electronic form that other health providers can easily access and read. And if we can't even get that done, how are we going to move to telemedicine?

Uber's great innovation wasn't its software so much as its brazenness at exploiting loopholes in taxi regulations and then mobilizing satisfied customers to scare off powerful interest groups and angry local politicians. In the near term, productivity increases will come from companies like Uber — companies whose competency isn't so much technology as it is figuring out how to apply existing technologies to resistant industries.

"It turns out the hardest things at companies isn’t building the technology but getting people to use it properly," Dixon says.

My best guess is that's the answer to the mystery laid out by Summers. Yes, there's new technology all around us, and some of it is pretty important. But developing the technology turns out to be a lot easier than getting people — and particularly companies — to use it properly.

2 comments:

Chris Dixon is half right and half wrong. He is right about this: "The problems aren't merely technical; they're personnel problems, workflow problems, organizational problems, regulatory problems."

But he is wrong about this: "The only way to solve those problems (and thus to get the productivity gains from solving them) is to build companies designed to solve those problems."

Long-established large enterprises are much slower to adopt new technologies for two reasons: 1) their executives are older and typically not strong users of consumer technologies (they have assistants who do that for them), so they judge new technology by their own outdated experiences and explain why most of it will not work at their company; 2) those same executives report to boards whose members are typically the same age or older and have had the same past experiences (they too have assistants who handle their personal technology), so the boards agree that most new technologies will not work at their company.

It will take time, but as leadership and board seats pass to younger people who are experienced with consumer technologies, they will force their companies to adopt.

The taxi industry may be failing, but Uber and Lyft have not won yet. They are just now starting to learn how hard it is to run a taxi company that is forced to comply with local, state and federal regulation. Who knows, maybe they will evolve back into a technology company providing technology to taxi companies.

Excellent analysis, Chris. Thanks for taking the time and sharing your thoughts.
